The Classification Office's 2026 report, Online Exposure: Experiences of Extreme or Illegal Content in Aotearoa, examines how often New Zealanders encounter extreme or illegal online content, how exposure differs across population groups, and how it affects them. Based on a representative survey, it finds that two-thirds of the population have encountered potentially objectionable material, such as graphic violence and child exploitation. The findings also show a significant gap in public understanding of which types of content are actually illegal to possess or share. Younger adults are the group most frequently exposed by accident, often through social media algorithms, and many of those exposed report substantial psychological distress. Ultimately, the report emphasises the need for better public education and clearer reporting pathways to help people navigate and reduce online harm.

Māori and Pacific exposure to extreme or illegal content

Participants across all age and gender groups reported seeing graphic or extreme content, but exposure was significantly higher among younger adults and became less common with age.

Exposure to extreme content was reported across all ethnic groups, but was more common for Māori: 79% had seen at least one type of content, 54% had seen three or more, 31% five or more, and 14% seven or more.

Results were also higher for Pacific people, although, because of the smaller sample size, most of these differences were not statistically significant. The exception was exposure to seven or more content types (19%).

Māori and Pacific people were also more likely to encounter this content frequently: 18% of Māori had seen it at least a few times a month, and 49% at least a few times in the past year. Similarly, 21% of Pacific people had seen it at least a few times a month, and 40% at least a few times in the past year (although this latter figure was not statistically significant).

Pacific people were also more likely to report having been harmed by exposure (29%), and both Pacific (14%) and Māori (11%) participants were more likely than the overall population to rate the experience as ‘very’ or ‘extremely’ harmful.

Conclusion

Social media algorithms appear to be inadvertently funnelling harmful content toward younger users, and potentially toward Māori and Pacific users, at higher rates. This pattern may reflect content moderation and recommendation systems that do not adequately account for cultural context, or that fail to protect vulnerable groups equally.

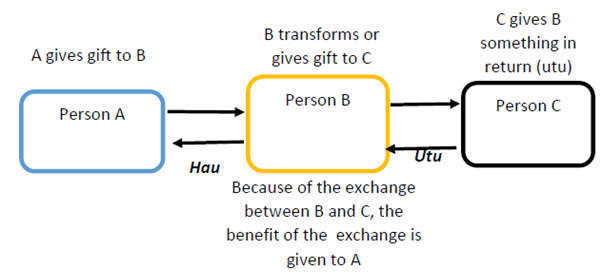

The disparity in harm experienced by Māori and Pacific communities suggests this is not merely a matter of individual choices or content consumption patterns. Rather, it points to systems that protect some New Zealanders less effectively than others, and it demands urgent attention to both technological infrastructure and cultural responsiveness in our approach to online safety.

Recommendations

Addressing this issue requires both immediate interventions and longer-term systemic reforms.

Short term

Agencies such as Netsafe, New Zealand Police and the Department of Internal Affairs (DIA) must establish clear, culturally appropriate reporting pathways that Māori and Pacific communities genuinely trust and can easily access.

Social media platforms should be required to audit their algorithms for disparate impact on different ethnic groups and to publish the results, so that their systems are not inadvertently amplifying harmful content to vulnerable populations. Targeted public education campaigns are also essential, particularly for rangatahi (Māori youth) and Pacific young people, to raise awareness of what content is illegal and how to report it effectively.

Longer-term systemic reforms

Platforms operating in New Zealand must be required to report transparently on content moderation decisions affecting New Zealand users, allowing for accountability and oversight.

Culturally informed mental health support services need to be developed specifically for those harmed by exposure to objectionable content, recognising that trauma responses may differ across cultural contexts.

The Classification Office and Netsafe should receive additional resources to work directly with Māori and Pacific community organisations, ensuring that protective measures are culturally responsive and community-led. Legislation should also be introduced to prevent platforms from algorithmically amplifying harmful content to high-risk groups.