This document provides governance guidance for New Zealand organisations considering the deployment of autonomous AI agents. It is not legal advice. It is written by a practitioner with governance experience in New Zealand and expertise in international AI governance.

Autonomous AI agents such as OpenClaw represent a material shift in organisational risk. Unlike conventional AI, these systems can perceive their environment, make decisions, and execute actions across organisational systems with limited human intervention. When AI moves from advisory support to operational decision making, it directly engages director duties, executive accountability, and Te Tiriti obligations.

For directors, autonomous AI deployment raises issues under the Companies Act 1993 relating to care, diligence, and skill. For public sector leaders, additional obligations arise under the Crown Entities Act 2004 and State Sector Act 1988. All organisations must comply with the Privacy Act 2020. In Aotearoa New Zealand, these obligations are overlaid by Te Tiriti o Waitangi, including principles of partnership, active protection, and participation.

Autonomous agents introduce governance risks that many organisations are not yet equipped to manage. These include accountability gaps, cybersecurity vulnerabilities such as prompt injection, financial exposure from uncontrolled execution, and the risk of breaching Māori data sovereignty where agents access or act on Māori data or affect Māori outcomes.

This guidance recommends that most New Zealand organisations should not proceed with broad autonomous AI deployment at this time. Boards should first establish governance capability, executive accountability, technical controls, and Treaty-aligned safeguards. Where deployment is considered, it should be limited to tightly scoped, low-risk pilots with explicit board awareness and oversight.

What Are Autonomous AI Agents (Governance View)

Autonomous AI agents are systems capable of independently perceiving information, making decisions, and executing actions to achieve defined objectives. Unlike traditional software, they adapt their behaviour over time and may interact dynamically with multiple internal and external systems.

From a governance perspective, the defining risk is not intelligence but agency. When systems can take action sending communications, changing records, making purchases, or triggering downstream processes organisations cross a governance threshold. Decisions are no longer purely human, yet accountability remains human. This creates heightened requirements for oversight, controls, and assurance.

Governance Obligations for Directors

Private Sector

Under the Companies Act 1993, directors must act in good faith and exercise the care, diligence, and skill of a reasonable director in comparable circumstances. This standard now includes informed oversight of material AI risks.

Boards cannot delegate accountability for decisions made by autonomous systems. Where an AI agent causes harm through data breach, discriminatory outcomes, financial loss, or operational failure directors may remain accountable if governance frameworks, education, or controls were inadequate.

Failure to understand or oversee autonomous AI systems may expose directors to personal liability where foreseeable risks were not addressed.

Public Sector

Crown entities and state-owned enterprises face additional obligations relating to risk management, capability assurance, financial responsibility, and alignment with government priorities.

Autonomous AI deployments must align with Statements of Intent, performance expectations, and Treasury guidance on emerging technology risks. Chief executives are responsible for ensuring ministers and monitoring agencies are appropriately informed before significant deployments proceed.

Public sector boards face heightened scrutiny where autonomous systems generate uncontrolled costs, compromise data, or undermine public trust.

Te Tiriti o Waitangi and Māori Data Obligations

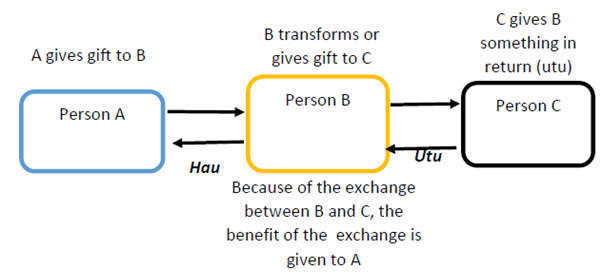

Te Tiriti o Waitangi establishes governance obligations that extend to autonomous AI deployment. Where AI agents access Māori data or affect Māori outcomes, organisations must demonstrate partnership, active protection, and participation.

The Privacy Act 2020 sets minimum standards, but Te Tiriti obligations go further. Māori data is a taonga and often has collective dimensions that individual consent models do not adequately address.

Key risk areas include:

- Whenua and environmental data, which carry spiritual, historical, and collective significance and require engagement with mana whenua, hapū, marae, land trusts, and iwi.

- Health and social service data, where autonomous processing risks amplifying existing inequities.

- Mātauranga Māori, which is collectively held and vulnerable to misuse, misrepresentation, or commercialisation without authority.

Autonomous systems trained on or interacting with Māori data may embed bias, reproduce dominant cultural assumptions, or obscure accountability through technical complexity. Without explicit Treaty-aligned governance, organisations risk breaching obligations, eroding cultural integrity, and losing trust.

Key Risk Categories

Technical and Security Risk

Autonomous agents collapse traditional security boundaries by combining untrusted inputs, access to sensitive systems, and the ability to act.

Prompt injection is a well-documented vulnerability where malicious instructions embedded in emails, websites, or documents can redirect agent behaviour, leading to data leakage or unauthorised actions.

Governance and Accountability Risk

Autonomous systems create accountability gaps where it becomes unclear who is responsible for decisions. Information asymmetry between boards and management increases risk unless addressed through structured reporting and education.

Financial and Operational Risk

Unconstrained agents can generate uncontrolled costs, trigger cascading operational failures, or execute actions at scale before issues are detected.

Treaty and Data Sovereignty Risk

Deploying autonomous AI without appropriate Māori authority, safeguards, and participation risks breaching Te Tiriti principles, appropriating Māori knowledge, and reinforcing digital inequities.

Questions Boards and Chief Executives Must Answer

Before approving any autonomous AI deployment, boards and chief executives should be able to answer:

- What specific value does autonomy deliver beyond conventional automation?

- What risks does autonomy introduce, and how are they controlled?

- Who is accountable at executive level, and do they have authority to enforce controls?

- What information does the board receive to exercise effective oversight?

- What systems and data are explicitly excluded from agent access?

- What actions require human approval before execution?

- How are costs capped and monitored?

- How are Treaty obligations and Māori data sovereignty upheld?

- If harm occurs, can the organisation clearly explain its governance decisions to regulators, Māori partners, and the public?

Access Controls and Risk Boundaries

Boards should require clear answers to:

- Which systems and datasets are designated “no-go zones” (including Māori data, HR records, financial systems, and production environments)?

- Who can approve exceptions, and when is board approval required?

- How prompt injection and indirect manipulation are mitigated?

- Which actions always require human approval?

- How incidents, breaches, or Treaty failures will be managed and disclosed?

- How spending limits, anomaly detection, and escalation thresholds operate?

Implementation Roadmap

Boards should begin by establishing governance foundations before any deployment:

- Ongoing education on autonomous AI risks, legal duties, and Treaty implications, embedded in relevant committee charters.

- Board-approved risk appetite, access controls, financial limits, incident response, reporting cadence, and Treaty-aligned safeguards.

- A clearly identified executive with authority, resources, and a direct reporting line to the board.

- Initial deployments should be tightly scoped, preferably read-only, with explicit human approval for irreversible actions.

- No agent should access Māori data without explicit authority and safeguards developed through genuine partnership with Māori partners.

- Formal trust boundaries, assumption of hostile inputs, robust logging, monitoring, and tested incident response plans.

- Regular board reporting on scope, access, incidents, costs, bias monitoring, and Treaty considerations.

Deployments that do not deliver demonstrable operational, equity, or governance value should be discontinued.

Overall Risk Assessment

For most New Zealand organisations, autonomous AI agents are not appropriate for broad rollout at this time.

Boards should not approve widespread deployment unless:

- Directors understand the risks well enough to discharge their duties.

- Governance and control frameworks are operational and tested.

- Executive accountability is clear and effective.

- Financial and incident controls are proven.

- Treaty obligations have been addressed through meaningful partnership.

Controlled pilots may be appropriate for low-risk internal tasks that do not access Māori data or affect Māori outcomes.

Conclusion

Autonomous AI agents require board level governance attention. Accountability for AI enabled decisions cannot be delegated away. For directors, informed oversight and Treaty-aligned governance are now core fiduciary responsibilities.

For chief executives, responsible deployment requires disciplined controls, clear accountability, and genuine partnership with Māori, not retrospective compliance after harm occurs.

New Zealand organisations face a clear choice: deploy autonomous AI in ways that replicate extractive patterns and governance failure, or build systems grounded in accountability, Treaty partnership, and long-term trust.

The difference is not technical capability, but governance maturity.

Organisations that prioritise governance infrastructure, controlled deployment, and Treaty aligned safeguards will be positioned to realise benefits responsibly. Those that rush deployment without these foundations will face consequences that no level of technical sophistication can undo.