Leaking of sensitive corporate and government data via Generative AI such as ChatGPT, Copilot, Gemini, Claude, and Perplexity is an ongoing risk for the many New Zealand companies and government agencies that lack Artificial Intelligence (AI) skills such as AI governance and AI security.

Data that is entered into an AI model may be retained by default, and the AI may use that data to learn from. This creates a risk known as AI memorisation, that is, models can generate verbatim copies of certain parts of their training data.

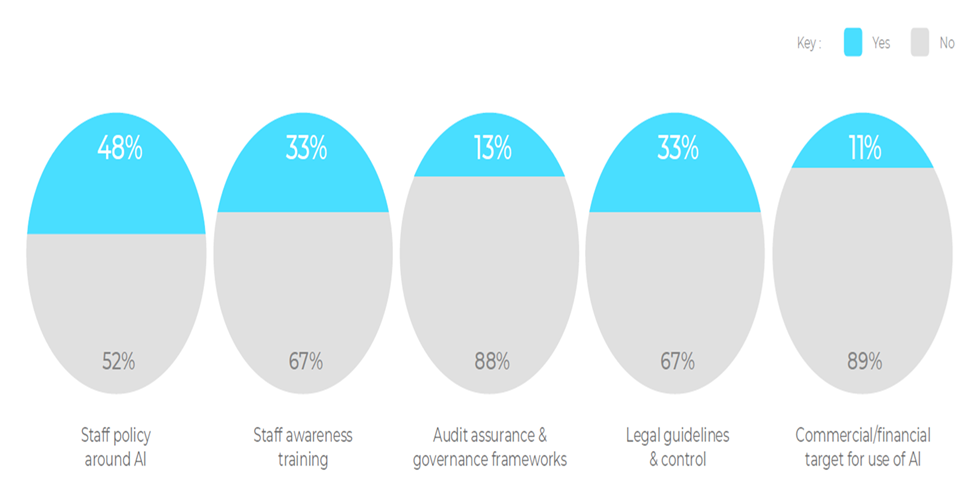

Datacom's 2024 AI attitudes in New Zealand research showed that 67% of companies stated security as a key concern. Yet most companies, like most New Zealand government groups, lack AI training in this area.

Recent international research, From Payrolls to Patents: The Spectrum of Data Leaked into GenAI (2024), shows some alarming rates of data leakage:

- 63.8% of ChatGPT users used the free version, and 53.5% of sensitive prompts were entered into it

- 5.64% was sensitive code, such as access keys and proprietary source code

- 8.5% of prompts included sensitive data, which was made up of the following:

  - 45.77% was sensitive data about customers, including billing details, customer reports, and customer authentication

  - 26.83% was employee data, including payroll and employment records

  - 14.88% was legal and finance data, such as mergers and acquisitions, investment portfolios and pipeline data

  - 6.88% was security policies

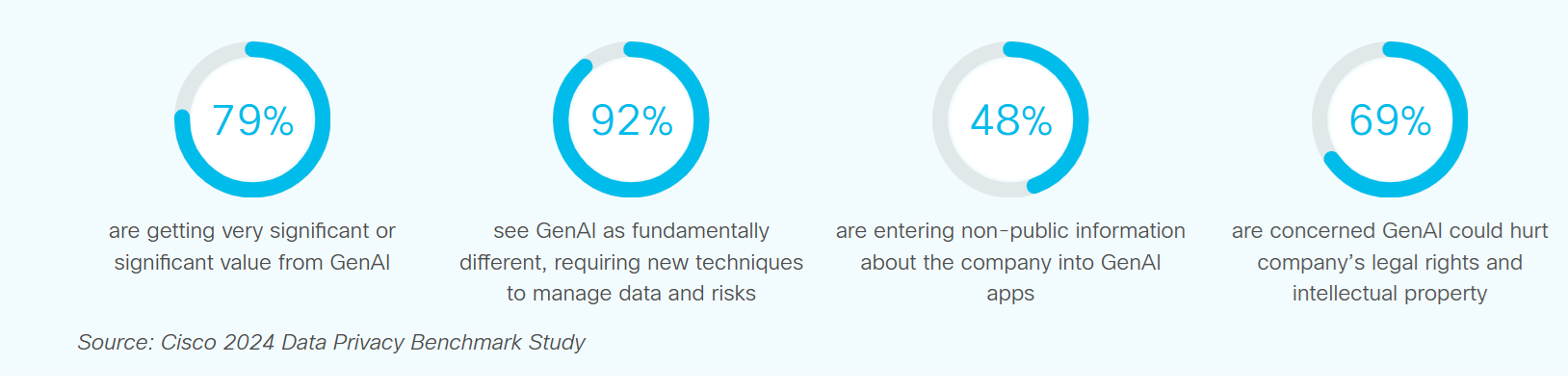

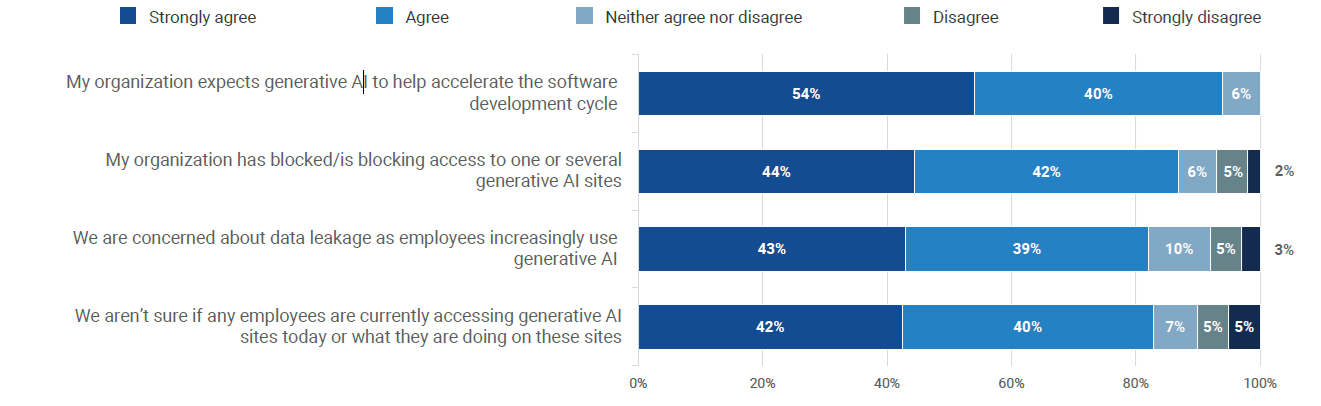

The Cisco 2024 Data Privacy Benchmark Study included organisations' concerns with generative Artificial Intelligence (GenAI). It shows a strong trend of concern for data privacy and brand reputation, and notes that data privacy is fundamentally different with AI and requires new techniques.

These concerns were reflected in the 2023 announcement from Samsung Electronics that it had banned the use of ChatGPT and other AI-powered chatbots by its employees. The ban was prompted by the discovery that an engineer had accidentally leaked sensitive internal source code by uploading it to ChatGPT. Locally here in New Zealand, many law firms have banned the use of Generative AI and/or have dedicated computers that are not on the network. The New Zealand Law Society has recognised the risks and created its own guidelines, Generative AI guidance for lawyers, and Microsoft has created the publication Generative AI for Lawyers.

TechTarget’s Generative AI for cybersecurity reveals widespread concern among cyber security experts, who increasingly deploy policies that block Generative AI because of the risk of data leakage.

AI Agents and training data

The forecast for 2025 is the widespread introduction and adoption of AI Agents, both in New Zealand and internationally. Many businesses will likely use them for productivity and cost reductions, thus creating more data leakage risks.

The article Microsoft CEO Satya Nadella: AI Agents Will Transform SaaS as We Know It reports that “Artificial intelligence (AI) agents will disrupt SaaS (software-as-a-service) models with a lot of backend business logics being automated by agents.”

An obvious solution for all organisations and governments is to create an AI Usage Policy and ensure that they have an AI governance group with a direct line of communication and responsibility to senior management and board members. A thorough AI Usage Policy is effective at preventing data leakage, provided end users are educated about it. This is very similar to when the Internet was rolled out in New Zealand: we had to create email and data policies for exactly the same reasons as we now need them for AI.
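One way an AI Usage Policy could be backed by a technical control is to screen prompts for obviously sensitive content before they are sent to a Generative AI service. The following is a minimal sketch only, not a complete data loss prevention tool. The pattern names and the functions `find_sensitive` and `is_safe_to_send` are hypothetical, chosen for illustration, and a real policy would define its own categories (customer, employee, legal, security and so on).

```python
import re

# Hypothetical patterns for illustration only. A real AI Usage Policy would
# define its own categories and far more thorough detection rules.
SENSITIVE_PATTERNS = {
    # AWS access key IDs commonly start with "AKIA" followed by 16 characters.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-style private key headers, e.g. "-----BEGIN RSA PRIVATE KEY-----".
    "private_key_block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    # A deliberately simple email address pattern.
    "email_address": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def find_sensitive(prompt: str) -> list[str]:
    """Return the sorted names of every pattern that matches the prompt."""
    return sorted(
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(prompt)
    )


def is_safe_to_send(prompt: str) -> bool:
    """Return True only when no sensitive pattern was found in the prompt."""
    return not find_sensitive(prompt)
```

A check like this only catches what its patterns describe, so it would complement user education and governance rather than replace them.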

It should be noted that most social media, including LinkedIn and X, and all Generative AI will by default retain your data for training and learning. Users must be vigilant, check the privacy settings and opt out of their data being used and retained, noting that not all Generative AI will allow you to opt out. For a number of options and details about various Generative AI tools, check out the Mozilla Foundation. These steps to opt out could form part of your AI Usage Policy.

Māori

For marae, iwi, hapū and Māori organisations, data leakage involves the same issues as for others, but it also puts at risk any traditional knowledge that is held within your organisation, such as whakapapa of people and the environment, carvings and photos, waiata, karakia and anything else that a person might enter into the AI.

I have seen an ever-increasing amount of mātauranga that is not online appear in AI and become more refined. That suggests to me that Māori and Māori groups are using AI with mātauranga Māori without realising that the AI uses the chat data and learns from it.

Conclusion

Using international best practice in AI governance is now essential for any New Zealand organisation, while Māori organisations have the added issue of protecting their traditional knowledge. It has become too common for people with no technical experience to cash in on this new technology, and for some technical experts to claim to be AI and data governance experts. When selecting a company or a person, ask for their credentials.