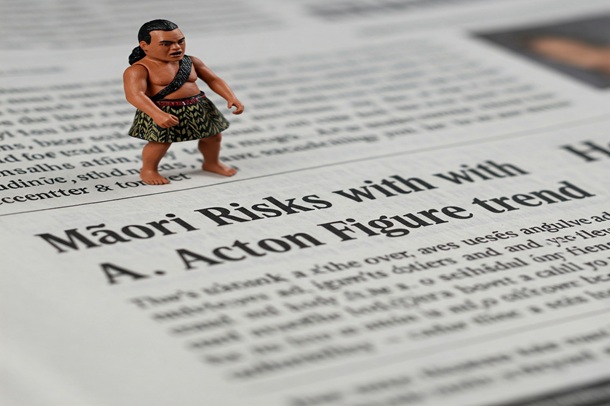

The AI action figure trend, in which users generate personalised or stylised figures with AI tools (such as custom avatars, toy-like images, or even physical 3D-printed models based on AI renderings), carries several risks, particularly around privacy, ethics, cultural appropriation, and deepfake potential.

From a Māori perspective, the AI action figure trend poses serious risks to tino rangatiratanga, mātauranga Māori, and whakapapa, as well as risks of cultural appropriation.

Whakapapa Exploitation

AI systems can capture facial features and visual markers tied to whakapapa, such as moko, facial structure, or iwi and hapū aesthetics. When these images are uploaded to foreign or corporate AI platforms, they risk being extracted, stored offshore, and used without context or consent. The result is loss of control and breaches of tapu.

- Whakapapa as data: Digitising whakapapa and treating identity data as a digital commodity undermines the sacredness of whakapapa and our responsibility as kaitiaki of that information.

- Suggested protective action: Ensure any engagement with image-based AI includes protections for whakapapa and adheres to Māori Data Sovereignty principles.

Mātauranga Māori Misappropriation

Taonga such as moko kauae, kākahu and pounamu are being stylised as digital accessories, stripping them of cultural meaning and breaching tikanga.

Eurocentric AI models often misrepresent Māori features, reinforcing digital erasure and stereotypes.

- Stylisation of taonga: AI-generated action figures might incorporate taonga such as moko, kākahu, tiki, or pounamu as mere aesthetic choices, removing their cultural, spiritual, and whakapapa significance.

- Loss of control over imagery: Once generated, these figures can be reused, replicated, or modified inappropriately, including being turned into toys or memes without tikanga or respect for tapu elements.

- Suggested protective action: Call for cultural safety frameworks, informed by the Wai 262 claim, and for kaitiaki-led oversight of how AI tools use taonga or culturally encoded imagery.

Cultural Commodification

- Turning Māori identity into a product: Stylised avatars can trivialise Māori appearance and culture, especially if made by or for non-Māori. It’s a modern form of digital appropriation, turning Indigenous presence into something consumable.

- Outsider platforms profiting: AI companies can profit from Māori images and styles without any acknowledgment, benefit sharing, or consultation with Māori communities.

- Suggested protective action: Continue to advocate for Māori cultural IP protections, particularly protections specific to generative AI and avatars, to prevent digital appropriation.

Rangatiratanga over Data (Data Sovereignty)

Māori images and likenesses are stored in offshore systems with no adherence to Māori governance or Te Tiriti principles. Rangatahi may see distorted or beautified versions of themselves, creating long-term identity and data risks.

- No control over where data goes: Most AI platforms are based offshore, sit largely outside New Zealand regulation, and do not recognise Te Tiriti or Māori data governance principles. This undermines Māori authority over how our images and data are stored, trained on, and used.

- Te Tiriti obligations ignored: These trends often operate outside the scope of Crown-Māori relationships, breaching Te Tiriti principles like partnership and protection.

- Suggested protective action: Mandate that platforms engaging with Māori data adhere to Te Tiriti and Māori Data Sovereignty principles and provide transparent data lifecycle disclosures.

Bias & Misrepresentation/Erasure

- Many AI models don’t “understand” Māori features, leading to Eurocentric outputs or distorted renderings of Māori appearance, contributing to erasure or misrepresentation.

- Underrepresentation of Māori faces or features in AI training sets leads to outputs that can be offensive, inaccurate, or completely off the mark.

- Commodification of identity: Turning a person (or culture) into a stylised, toy-like figure can be seen as dehumanising or trivialising.

- Suggested protective action: Support the creation of Indigenous- and Māori-led AI datasets and representation models that reflect authentic, diverse Māori realities.

Risks to Tamariki and Rangatahi

- Identity distortion: Māori youth engaging with AI avatars may see stylised versions of themselves that reinforce western beauty standards or misrepresent their heritage.

- Long-term data footprint: Tamariki uploading their images are unknowingly contributing to lifelong digital trails, often with no understanding of where that data will go or how it will be used in the future.

- Suggested protective action: Develop educational tools and digital literacy campaigns grounded in kaupapa Māori so that rangatahi can give informed consent.

Tikanga and Tapu Violations

AI-generated figures can appear in culturally unsafe contexts (e.g., adult content, violent games), especially when moko or Māori symbols are misused.

- Image use outside of tikanga: AI avatars can end up in digital spaces that breach tikanga, e.g., digital urupā, avatars used in games or media without karakia or cultural protocol.

- Moko being altered or used wrongly: Moko is a visual language of whakapapa. AI stylisations can displace this meaning and even reproduce moko on people without the right to wear them.

- Suggested protective action: Establish digital tikanga guidelines and platform accountability requirements in line with iwi and hapū protocols.

In addition to Te Ao Māori concerns, there are a number of other risks that impact everyone, including:

Privacy Risks

- Data Collection: Many AI tools collect and store facial data or personal identifiers. This data can be reused, sold, hacked, or leaked.

- Facial Recognition Abuse: Uploading your image to AI platforms can feed into facial recognition training datasets without your consent.

- Identity Theft: AI-generated figures can be realistic enough to be repurposed for impersonation or scams (especially if linked to your online presence).

Deepfake & Misinformation Risks

- Manipulation of Likeness: Once your image is in the system, it could be altered into inappropriate or offensive contexts (e.g., political propaganda, pornographic deepfakes).

- Malicious Use: AI-generated personas can be used in fake news, phishing, or fraud by mimicking real individuals.

Generative IP Issues

- Ownership: If an AI creates a figure based on your image, who owns the result? Platform terms of service often grant the provider broad rights over uploaded images and generated outputs, which raises IP concerns.

- Derivative Works: Action figures based on celebrities, Indigenous characters, or traditional symbols could infringe on copyrights or cultural IP.

Social Risks

- Unrealistic Expectations: Action figures tend to stylise or “beautify” people, reinforcing unattainable body and image ideals.

- Youth Vulnerability: Children and teens might be particularly vulnerable to overexposure, identity play, or bullying based on AI-generated versions of themselves.

Summary:

The AI action figure trend is not a neutral technological development; it is a site of potential cultural harm, digital colonisation, and Treaty breaches. Proactive, Māori-led policy is required to safeguard Māori rights, identity, and sovereignty in the age of generative AI.

The AI action figure trend clashes with core Māori values: mana, tapu, whakapapa, and kaitiakitanga. Without proper Māori data governance and consultation, it risks becoming another front where Māori identity is misused, misrepresented, and monetised without mana or consent.

Disclaimer: Image created using Google Gemini with the prompt “create an image of Māori risks with AI Action Figure Trend.” ChatGPT was used extensively to rework and expand the author's notes into this text.